Research

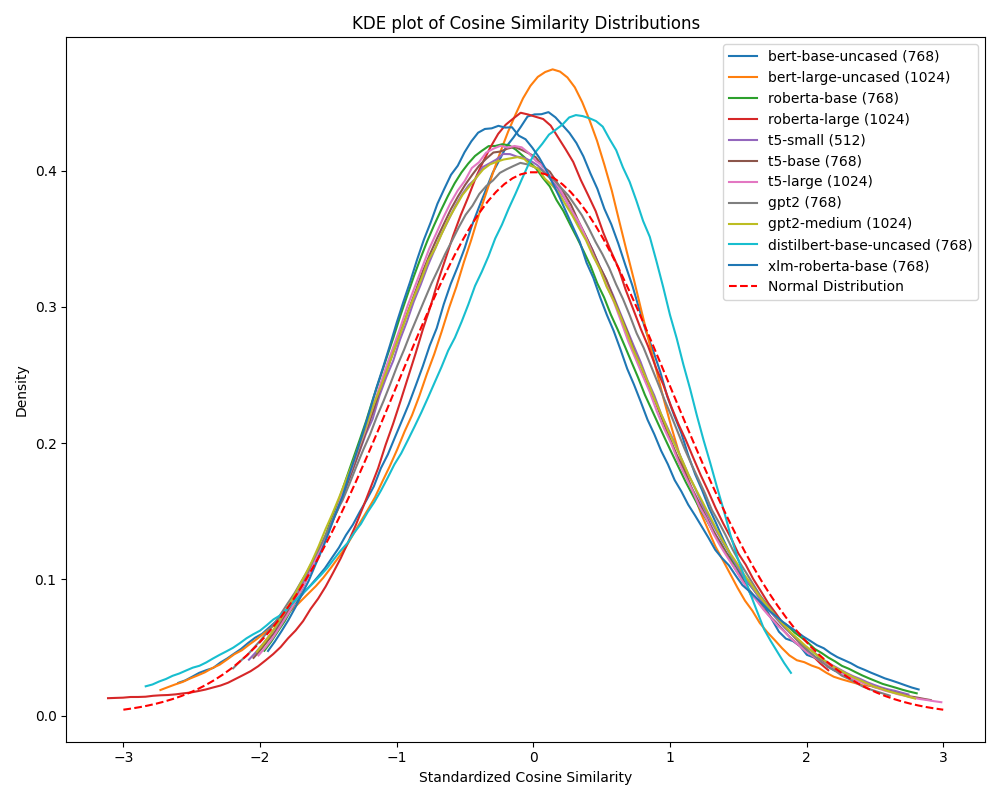

My current AI research is centered on two intertwined topics: (1) understanding the geometry of the latent/embedding spaces of language models, and (2) the application of modern (large) language models in the domain of Finnish healthcare. In studying the geometry of the embedding spaces of language models, my goals are close to those of mechanistic interpretability; for example, I was strongly inspired by Anthropic's "Toy Models of Superposition" paper. On the healthcare side, I believe there is much human benefit to be gained from intelligent systems that help clinical staff focus more on patient care and interaction.

As part of my goal of understanding "the geometry of language", I also try to find time to study linguistics, which has led to some research collaborations as well.

In my previous life in mathematics research, my interests lay largely in quasiconformal geometry, metric geometry and quantitative topology. My specific research questions focused on branched covers, quasiregular mappings and mappings of finite distortion between Euclidean domains. In particular, I was interested in the so-called branch set of a mapping, and in the interplay between the structure of the branch set and the local and global behaviour of the mapping.